
Zander et al. Complex Eng Syst 2023;3:9 | http://dx.doi.org/10.20517/ces.2023.11


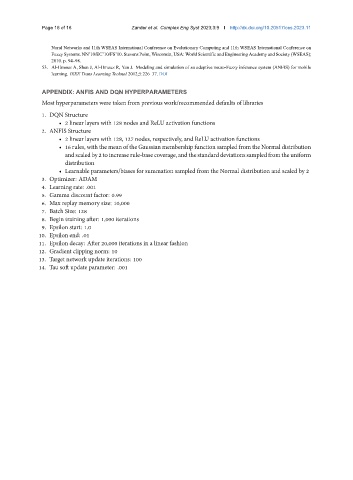

APPENDIX: ANFIS AND DQN HYPERPARAMETERS

Most hyperparameters were taken from previous work or from the recommended defaults of the libraries used.

1. DQN Structure

• 2 linear layers with 128 nodes and ReLU activation functions

2. ANFIS Structure

• 2 linear layers with 128, 127 nodes, respectively, and ReLU activation functions

• 16 rules, with the means of the Gaussian membership functions sampled from the Normal distribution and scaled by 2 to increase rule-base coverage, and the standard deviations sampled from the uniform distribution

• Learnable parameters/biases for summation sampled from the Normal distribution and scaled by 2

3. Optimizer: Adam

4. Learning rate: 0.001

5. Gamma discount factor: 0.99

6. Max replay memory size: 10,000

7. Batch Size: 128

8. Begin training after: 1,000 iterations

9. Epsilon start: 1.0

10. Epsilon end: 0.01

11. Epsilon decay: linear, reaching the end value after 20,000 iterations

12. Gradient clipping norm: 10

13. Target network update interval: every 100 iterations

14. Tau (soft update parameter): 0.001
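A minimal sketch of the DQN structure in item 1, written in dependency-free Python so that the layer shapes are explicit. It reads "2 linear layers with 128 nodes" as two hidden layers followed by a linear output layer with one Q-value per action. That output layer, the weight initialization, and all function names (`make_dqn`, `q_values`, etc.) are assumptions for illustration, not the authors' implementation.

```python
import random

HIDDEN = 128  # nodes per hidden layer (from the appendix)


def init_linear(n_in, n_out, rng):
    """Assumed init: weights ~ U(-1/sqrt(n_in), 1/sqrt(n_in)), biases zero."""
    bound = 1.0 / n_in ** 0.5
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def linear(x, layer):
    """Affine map: one dot product plus bias per output node."""
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(x):
    return [max(0.0, v) for v in x]


def make_dqn(n_obs, n_actions, seed=0):
    """Two 128-node hidden layers plus an (assumed) linear output head."""
    rng = random.Random(seed)
    return [init_linear(n_obs, HIDDEN, rng),
            init_linear(HIDDEN, HIDDEN, rng),
            init_linear(HIDDEN, n_actions, rng)]


def q_values(net, obs):
    """Forward pass: ReLU after each hidden layer, no activation on the output."""
    h = relu(linear(obs, net[0]))
    h = relu(linear(h, net[1]))
    return linear(h, net[2])
```

In a framework such as PyTorch the same network is a few lines of `Linear` and `ReLU` modules; the pure-Python version only spells out the shapes.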
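The ANFIS rule-base initialization in item 2 can be sketched as follows. Only the rule count (16), the scaling of the means by 2, and the use of Normal and uniform distributions come from the appendix. The distribution parameters (N(0, 1) for the means, U(0, 1] for the standard deviations), the product T-norm for rule firing, the normalization step, and the function names are assumptions based on a conventional ANFIS.

```python
import math
import random

N_RULES = 16       # number of fuzzy rules (from the appendix)
MEAN_SCALE = 2.0   # means scaled by 2 to widen rule-base coverage (from the appendix)


def init_rules(n_inputs, n_rules=N_RULES, seed=0):
    """Return (means, sigmas), each a list of n_rules lists of n_inputs floats.

    Assumption: means ~ 2 * N(0, 1) and sigmas ~ U(0, 1]; the appendix names
    the distributions but not their parameters.
    """
    rng = random.Random(seed)
    means = [[MEAN_SCALE * rng.gauss(0.0, 1.0) for _ in range(n_inputs)]
             for _ in range(n_rules)]
    # 1 - random() lies in (0, 1], so no standard deviation is exactly zero
    sigmas = [[1.0 - rng.random() for _ in range(n_inputs)]
              for _ in range(n_rules)]
    return means, sigmas


def gaussian_mf(x, mean, sigma):
    """Gaussian membership degree of x, in [0, 1]."""
    return math.exp(-((x - mean) ** 2) / (2.0 * sigma ** 2))


def normalized_firing_strengths(x, means, sigmas):
    """Assumption: product T-norm across inputs, then normalization to sum to 1."""
    strengths = []
    for rule_means, rule_sigmas in zip(means, sigmas):
        w = 1.0
        for xi, m, s in zip(x, rule_means, rule_sigmas):
            w *= gaussian_mf(xi, m, s)
        strengths.append(w)
    total = sum(strengths)
    if total == 0.0:
        # every rule underflowed to zero; fall back to equal weights
        return [1.0 / len(strengths)] * len(strengths)
    return [w / total for w in strengths]
```

Scaling the means by 2 spreads the rule centres over a wider region of the input space, so that fewer inputs fall where every rule fires only weakly.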
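Items 6 to 8 describe a standard experience-replay setup. The sketch below assumes that "iterations" means environment steps and that minibatches are sampled uniformly. The class and function names are hypothetical.

```python
import random
from collections import deque

MEMORY_SIZE = 10_000     # max replay memory size (from the appendix)
BATCH_SIZE = 128         # minibatch size (from the appendix)
LEARNING_STARTS = 1_000  # begin training after this many iterations (from the appendix)


class ReplayMemory:
    """Fixed-capacity FIFO buffer of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity=MEMORY_SIZE, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def push(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size=BATCH_SIZE):
        # assumption: uniform sampling without replacement
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def should_train(iteration, memory, batch_size=BATCH_SIZE,
                 learning_starts=LEARNING_STARTS):
    """Train only after the warm-up period and once a full batch is available."""
    return iteration >= learning_starts and len(memory) >= batch_size
```

The warm-up period fills the buffer with transitions from the (initially fully random) policy before any gradient step is taken.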
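Items 9 to 11 describe a linearly annealed epsilon-greedy policy. This sketch assumes that epsilon falls linearly from 1.0 to 0.01 over the first 20,000 iterations and then stays at 0.01. `epsilon`, `greedy_action`, and `select_action` are illustrative names.

```python
import random

EPS_START = 1.0
EPS_END = 0.01
EPS_DECAY_STEPS = 20_000  # assumption: epsilon reaches EPS_END at this iteration


def epsilon(step, start=EPS_START, end=EPS_END, decay_steps=EPS_DECAY_STEPS):
    """Linearly anneal from `start` to `end` over `decay_steps`, then hold at `end`."""
    fraction = min(step / decay_steps, 1.0)
    return end + (start - end) * (1.0 - fraction)


def greedy_action(q_values):
    """Index of the largest Q-value."""
    return max(range(len(q_values)), key=q_values.__getitem__)


def select_action(q_values, step, rng):
    """Epsilon-greedy: pick a uniformly random action with probability epsilon(step)."""
    if rng.random() < epsilon(step):
        return rng.randrange(len(q_values))
    return greedy_action(q_values)
```

For example, halfway through the decay, `epsilon(10_000)` is 0.505.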
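The discount factor (item 5) and the gradient clipping norm (item 12) enter each update as shown below. The target assumes the standard DQN form computed from a separate target network. The clipping rescales the whole gradient vector by its global L2 norm, which is similar in spirit to PyTorch's `torch.nn.utils.clip_grad_norm_`. Gradients are represented here as a flat list of floats for simplicity, and the function names are hypothetical.

```python
import math

GAMMA = 0.99          # discount factor (from the appendix)
MAX_GRAD_NORM = 10.0  # gradient clipping norm (from the appendix)


def td_target(reward, next_q_values, done, gamma=GAMMA):
    """Assumed DQN target: r + gamma * max_a' Q_target(s', a'), or just r if terminal."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def clip_by_global_norm(grads, max_norm=MAX_GRAD_NORM):
    """Rescale a flat gradient list so that its L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)
    scale = max_norm / total_norm
    return [g * scale for g in grads]
```

For example, with reward 1 and next-state Q-values [2, 5], the non-terminal target is 1 + 0.99 × 5 = 5.95. A gradient [30, 40] has norm 50 and is scaled down to [6, 8].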
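Items 13 and 14 together suggest a soft (Polyak) target-network update. The appendix does not state exactly how the two settings interact. This sketch assumes that the update target ← tau * online + (1 - tau) * target, with tau = 0.001, is applied once every 100 iterations. Parameters are flat lists of floats, and the function names are hypothetical.

```python
TAU = 0.001                   # soft update parameter (from the appendix)
TARGET_UPDATE_INTERVAL = 100  # iterations between target updates (from the appendix)


def soft_update(target_params, online_params, tau=TAU):
    """Polyak averaging: target <- tau * online + (1 - tau) * target."""
    return [tau * p + (1.0 - tau) * t for t, p in zip(target_params, online_params)]


def maybe_update_target(iteration, target_params, online_params,
                        interval=TARGET_UPDATE_INTERVAL, tau=TAU):
    """Assumption: the soft update runs only on multiples of `interval`."""
    if iteration > 0 and iteration % interval == 0:
        return soft_update(target_params, online_params, tau)
    return target_params
```

With tau = 1.0 the same function reduces to a hard copy of the online parameters, which is the other common way of using a fixed update interval.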