Page 28 - Read Online

P. 28

Page 10 of 16 Zander et al. Complex Eng Syst 2023;3:9 I http://dx.doi.org/10.20517/ces.2023.11

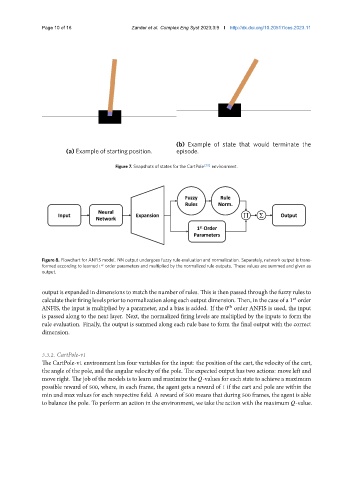

(b) Example of state that would terminate the

(a) Example of starting position. episode.

Figure 7. Snapshots of states for the CartPole [51] environment.

Figure 8. Flowchart for ANFIS model. NN output undergoes fuzzy rule evaluation and normalization. Separately, network output is trans-

formed according to learned 1 order parameters and multiplied by the normalized rule outputs. These values are summed and given as

output.

output is expanded in dimensions to match the number of rules. This is then passed through the fuzzy rules to

calculate their firing levels prior to normalization along each output dimension. Then, in the case of a 1 order

ℎ

ANFIS, the input is multiplied by a parameter, and a bias is added. If the 0 order ANFIS is used, the input

is passed along to the next layer. Next, the normalized firing levels are multiplied by the inputs to form the

rule evaluation. Finally, the output is summed along each rule base to form the final output with the correct

dimension.

3.3.2. CartPole-v1

The CartPole-v1 environment has four variables for the input: the position of the cart, the velocity of the cart,

the angle of the pole, and the angular velocity of the pole. The expected output has two actions: move left and

move right. The job of the models is to learn and maximize the -values for each state to achieve a maximum

possible reward of 500, where, in each frame, the agent gets a reward of 1 if the cart and pole are within the

min and max values for each respective field. A reward of 500 means that during 500 frames, the agent is able

to balance the pole. To perform an action in the environment, we take the action with the maximum -value.