Page 22 - Read Online

P. 22

He et al. Intell. Robot. 2025, 5(2), 313-32 I http://dx.doi.org/10.20517/ir.2025.16 Page 321

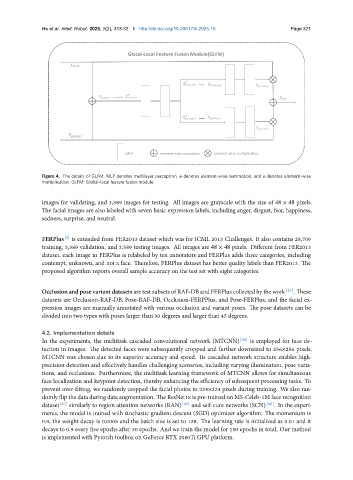

Figure 4. The details of GLFM. MLP denotes multilayer perceptron, ⊕ denotes element-wise summation, and ⊗ denotes element-wise

multiplication. GLFM: Global-local feature fusion module.

images for validating, and 3,589 images for testing. All images are grayscale with the size of 48 × 48 pixels.

The facial images are also labeled with seven basic expression labels, including anger, disgust, fear, happiness,

sadness, surprise, and neutral.

FERPlus [9] is extended from FER2013 dataset which was for ICML 2013 Challenges. It also contains 28,709

training, 3,589 validation, and 3,589 testing images. All images are 48 × 48 pixels. Different from FER2013

dataset, each image in FERPlus is relabeled by ten annotators and FERPlus adds three categories, including

contempt, unknown, and not a face. Therefore, FERPlus dataset has better quality labels than FER2013. The

proposed algorithm reports overall sample accuracy on the test set with eight categories.

Occlusion and pose variant datasets are test subsets of RAF-DB and FERPlus collected by the work [45] . These

datasets are Occlusion-RAF-DB, Pose-RAF-DB, Occlusion-FERPPlus, and Pose-FERPlus, and the facial ex-

pression images are manually annotated with various occlusion and variant poses. The pose datasets can be

divided into two types with poses larger than 30 degrees and larger than 45 degrees.

4.2. Implementation details

In the experiments, the multitask cascaded convolutional network (MTCNN) [46] is employed for face de-

tection in images. The detected faces were subsequently cropped and further downsized to 256×256 pixels.

MTCNN was chosen due to its superior accuracy and speed. Its cascaded network structure enables high-

precision detection and effectively handles challenging scenarios, including varying illumination, pose varia-

tions, and occlusions. Furthermore, the multitask learning framework of MTCNN allows for simultaneous

face localization and keypoint detection, thereby enhancing the efficiency of subsequent processing tasks. To

prevent over-fitting, we randomly cropped the facial photos to 224×224 pixels during training. We also ran-

domly flip the data during data augmentation. The ResNet18 is pre-trained on MS-Celeb-1M face recognition

dataset [47] similarly to region attention networks (RAN) [45] and self-cure networks (SCN) [48] . In the experi-

ments, the model is trained with stochastic gradient descent (SGD) optimizer algorithm. The momentum is

0.9, the weight decay is 0.0005 and the batch size is set to 128. The learning rate is initialized as 0.01 and it

decays to 0.9 every five epochs after 30 epochs. And we train the model for 150 epochs in total. Our method

is implemented with Pytorch toolbox on GeForce RTX 2080Ti GPU platform.