
Zhang et al. Intell Robot 2022;2(3):275-97 | http://dx.doi.org/10.20517/ir.2022.20 Page 289

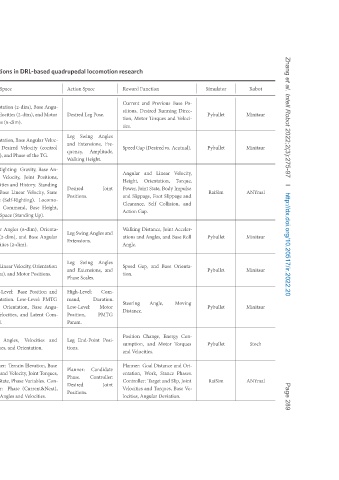

APPENDIX

Table A1. Classification of the most relevant publications in DRL-based quadrupedal locomotion research

| Publication | Venue | Year | Description | Algorithm | State Space | Action Space | Reward Function | Simulator | Robot |
|---|---|---|---|---|---|---|---|---|---|
| Sim-to-Real: Learning Agile Locomotion For Quadruped Robots [39] | RSS | 2018 | A system to design agile locomotion by leveraging DRL algorithms. | PPO | Orientation (2-dim), Angular Velocities (2-dim), and Motor Angles (8-dim). | Desired Leg Pose. | Current and Previous Base Positions, Desired Running Direction, Motor Torques and Velocities. | PyBullet | Minitaur |
| Policies Modulating Trajectory Generators [49] | CoRL | 2018 | An architecture for learning behaviors by using PMTG, which provides memory and prior knowledge. | PPO | Base Orientation, Base Angular Velocities (control input), Desired Velocity, and Phase of the TG. | Leg Swing Angles and Extensions, Frequency, Amplitude, and Walking Height. | Speed Gap (Desired vs. Actual). | PyBullet | Minitaur |
| Robust Recovery Controller for a Quadrupedal Robot using Deep Reinforcement Learning [48] | arXiv | 2019 | A model-free DRL approach to control recovery maneuvers using a hierarchical controller. | TRPO + GAE [22] | Self-Righting: Gravity, Base Angular Velocities, Joint Positions, and Joint State History. Standing Up: State Space (Self-Righting), Base Linear Velocity, and Height. Locomotion: State Space (Standing Up) and Command. | Desired Joint Positions. | Angular and Linear Velocity, Orientation, Height, Joint State, Torque, Power, Body Impulse and Slippage, Foot Slippage and Clearance, Self Collision, and Action Gap. | RaiSim | ANYmal |
| Learning to Walk via Deep Reinforcement Learning [98] | RSS | 2019 | A sample-efficient maximum-entropy RL algorithm requiring minimal per-task tuning to learn neural network policies. | SAC | Motor Angles (8-dim), Base Orientation (2-dim), and Angular Velocities (2-dim). | Leg Swing Angles and Extensions. | Walking Distance, Joint Angles, Base Accelerations, and Base Roll Angle. | PyBullet | Minitaur |
| Data Efficient Reinforcement Learning for Legged Robots [99] | CoRL | 2019 | A model-based RL framework for learning locomotion from only 4.5 minutes of data collected on a quadruped robot. | CEM + MPC | Base Linear Velocity, Orientation (3-dim), and Motor Positions. | Leg Swing Angles and Extensions, and Phase Scales. | Speed Gap and Orientation. | PyBullet | Minitaur |
| Hierarchical Reinforcement Learning for Quadruped Locomotion [46] | IROS | 2019 | A hierarchical framework to automatically decompose complex locomotion tasks. | ARS | High-Level: Base Position and Orientation. Low-Level: PMTG State, Base Orientation, Angular Velocities, and Command. | High-Level: Command and Duration. Low-Level: Motor Positions and PMTG Parameters. | Steering Change and Moving Distance. | PyBullet | Minitaur |
| Realizing Learned Quadruped Locomotion Behaviors through Kinematic Motion Primitives (kMPs) [55] | ICRA | 2019 | Effective quadrupedal walking is learned using DRL, and kMPs are used to realize these behaviors in Stoch. | PPO | Joint Angles, Torques, and Velocities, and Base Orientation. | Leg End-Point Positions. | Position, Velocities, and Energy Consumption. | PyBullet | Stoch |
| DeepGait: Planning and Control of Quadrupedal Gaits using Deep Reinforcement Learning [100] | ICRA | 2019 | A technique for training terrain-aware locomotion, which combines model-based planning and RL. | TRPO, PPO, GAE | Planner: Terrain Elevation, Base State, Feet Positions (Current&Next), and Phase. Controller: Base State, Base Velocity, Joint Angles, Velocities, and Torques, Phase, and Latent Variables. | Planner: Candidate Base Phase. Controller: Desired Joint Positions. | Planner: Goal, Work, and Target Deviation. Controller: Base Orientation, Angular Velocities, Joint Velocities and Torques, Slip, and Stance Phases. | RaiSim | ANYmal |
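The reward terms listed for Sim-to-Real [39] (current and previous base positions, desired running direction, motor torques and velocities) fit together naturally as forward progress minus an energy penalty. The snippet below is a minimal sketch under that assumption, taking the form r = (p_curr - p_prev) · d - w · dt · |τ · q̇|. The function name `locomotion_reward` and the defaults for `dt` and `energy_weight` are illustrative placeholders, not values given in this table.

```python
import math
from typing import Sequence


def locomotion_reward(p_prev: Sequence[float], p_curr: Sequence[float],
                      direction: Sequence[float], torques: Sequence[float],
                      joint_velocities: Sequence[float],
                      dt: float = 0.01, energy_weight: float = 0.008) -> float:
    """Sketch: progress along a desired direction minus an energy penalty.

    Assumed form: r = (p_curr - p_prev) . d_hat - energy_weight * dt * |tau . qdot|.
    `dt` and `energy_weight` are hypothetical defaults chosen for illustration.
    """
    if len(torques) != len(joint_velocities):
        raise ValueError("torques and joint_velocities must have the same length")

    # Normalize the desired running direction so progress is measured in metres.
    norm = math.sqrt(sum(c * c for c in direction))
    if norm == 0.0:
        raise ValueError("direction must be non-zero")
    unit_dir = [c / norm for c in direction]

    # Displacement of the base between steps, projected onto the desired direction.
    progress = sum((pc - pp) * d for pp, pc, d in zip(p_prev, p_curr, unit_dir))

    # Mechanical power: sum over motors of torque times joint velocity.
    power = sum(t * v for t, v in zip(torques, joint_velocities))

    return progress - energy_weight * dt * abs(power)


if __name__ == "__main__":
    r = locomotion_reward([0.0, 0.0, 0.0], [0.05, 0.01, 0.0], [1.0, 0.0, 0.0],
                          [1.0] * 8, [2.0] * 8)
    print(round(r, 5))
```

Here the sideways displacement (0.01) contributes nothing because only motion along the desired direction is rewarded, while the eight motors' combined power adds a small penalty that discourages wasteful gaits.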