Page 79 | Shu et al. Intell Robot 2024;4(1):74-86 | http://dx.doi.org/10.20517/ir.2024.05

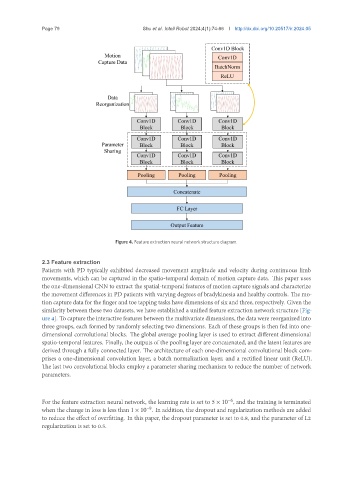

Figure 4. Feature extraction neural network structure diagram.

2.3 Feature extraction

Patients with PD typically exhibit decreased movement amplitude and velocity during continuous limb movements, which can be captured in the spatio-temporal domain of motion capture data. This paper uses a one-dimensional CNN to extract the spatio-temporal features of motion capture signals and to characterize the movement differences among PD patients with varying degrees of bradykinesia and healthy controls. The motion capture data for the finger and toe tapping tasks have dimensions of six and three, respectively. Given the similarity between these two datasets, we have established a unified feature extraction network structure [Figure 4]. To capture the interactive features between the multivariate dimensions, the data were reorganized into three groups, each formed by randomly selecting two dimensions. Each of these groups is then fed into one-dimensional convolutional blocks. A global average pooling layer is used to extract the spatio-temporal features of the different dimensions. Finally, the outputs of the pooling layers are concatenated, and the latent features are derived through a fully connected layer. Each one-dimensional convolutional block comprises a one-dimensional convolution layer, a batch normalization layer, and a rectified linear unit (ReLU). The last two convolutional blocks employ a parameter sharing mechanism to reduce the number of network parameters.
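
The data flow described above (grouping, one-dimensional convolution, ReLU, global average pooling, concatenation) can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation. The filter count, kernel width, random weights, and the function names are assumptions made for illustration, and each group is assumed to draw its two dimensions independently. A single convolution per group stands in for the stack of convolutional blocks; batch normalization, parameter sharing, and the final fully connected layer are omitted.

```python
import random


def conv1d_relu(signal, kernels, bias):
    """'Valid' 1-D convolution (cross-correlation, as in CNNs) followed by ReLU.

    signal:  list of channels, each a list of T floats
    kernels: list of filters; each filter has one kernel (K floats) per channel
    bias:    one float per filter
    """
    length = len(signal[0])
    width = len(kernels[0][0])
    feature_maps = []
    for f, filt in enumerate(kernels):
        row = []
        for t in range(length - width + 1):
            acc = bias[f]
            for c, kern in enumerate(filt):
                for k in range(width):
                    acc += kern[k] * signal[c][t + k]
            row.append(max(0.0, acc))  # ReLU
        feature_maps.append(row)
    return feature_maps


def global_average_pool(feature_maps):
    """Average each feature map over time, giving one value per filter."""
    return [sum(row) / len(row) for row in feature_maps]


def random_kernels(rng, n_filters, n_channels, width):
    """Hypothetical untrained weights, only to make the sketch runnable."""
    return [[[rng.uniform(-1.0, 1.0) for _ in range(width)]
             for _ in range(n_channels)]
            for _ in range(n_filters)]


def extract_features(data, rng, n_filters=4, width=3):
    """data: list of dimensions (6 for finger tapping, 3 for toe tapping)."""
    pooled = []
    for _ in range(3):  # three groups, as in the text
        # Assumption: two distinct dimensions are drawn independently per group.
        dims = rng.sample(range(len(data)), 2)
        group = [data[d] for d in dims]
        kernels = random_kernels(rng, n_filters, 2, width)
        maps = conv1d_relu(group, kernels, [0.0] * n_filters)
        pooled.extend(global_average_pool(maps))  # concatenate pooled outputs
    return pooled
```

Calling `extract_features` on a six-dimensional or a three-dimensional recording returns a vector of the same length (three groups times `n_filters`), which is what allows one network structure to serve both tapping tasks.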

For the feature extraction neural network, the learning rate is set to $5 \times 10^{-6}$, and the training is terminated when the change in loss is less than $1 \times 10^{-9}$. In addition, dropout and L2 regularization are applied to reduce the effect of overfitting. In this paper, the dropout parameter is set to 0.8, and the parameter of L2 regularization is set to 0.5.
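
The stopping rule and the L2 term can be sketched as follows. This is a hedged illustration rather than the authors' training code: `CONFIG`, `l2_penalty`, `train`, and `make_toy_step` are hypothetical names, and the exponents of the learning rate and tolerance are read from the extracted page as $10^{-6}$ and $10^{-9}$. The source does not state whether the dropout value of 0.8 is a drop or a keep probability. The toy objective is a single weight with a quadratic loss, and it uses a larger learning rate than the reported one so that it converges in a few dozen steps.

```python
# Hyperparameters as reported in the text (exponents reconstructed).
CONFIG = {
    "learning_rate": 5e-6,
    "loss_tolerance": 1e-9,
    "dropout": 0.8,      # drop vs. keep probability is not stated in the source
    "l2_lambda": 0.5,
}


def l2_penalty(weights, lam):
    """L2 regularization term: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def train(step, tolerance, max_steps=1_000_000):
    """Call step() until the loss changes by less than `tolerance`.

    `step` is a hypothetical callable that performs one parameter update
    and returns the current loss. Returns (final_loss, steps_taken).
    """
    previous = step()
    for n in range(2, max_steps + 1):
        current = step()
        if abs(previous - current) < tolerance:
            return current, n
        previous = current
    return previous, max_steps


def make_toy_step(lr, lam):
    """Toy objective (w - 1)^2 + lam * w^2, minimized by gradient descent."""
    w = [0.0]

    def step():
        grad = 2.0 * (w[0] - 1.0) + 2.0 * lam * w[0]
        w[0] -= lr * grad
        return (w[0] - 1.0) ** 2 + l2_penalty(w, lam)

    return step


# Toy run: lr=0.1 is chosen only so the example finishes quickly.
final_loss, steps = train(make_toy_step(0.1, CONFIG["l2_lambda"]),
                          CONFIG["loss_tolerance"])
```

With a tolerance as small as $10^{-9}$, training stops only once the loss curve is essentially flat, so a `max_steps` cap is a sensible safeguard in practice.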