Page 15 - Read Online

P. 15

Ji et al. Intell Robot 2021;1(2):151-75 https://dx.doi.org/10.20517/ir.2021.14 Page 159

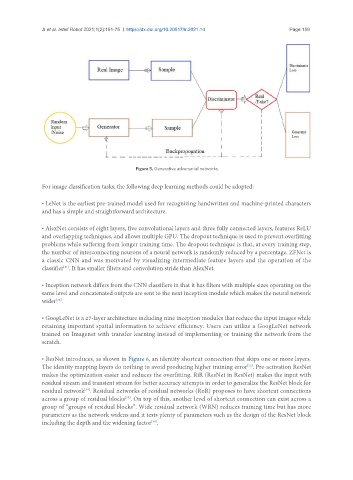

Figure 5. Generative adversarial networks.

For image classification tasks, the following deep learning methods could be adopted:

• LeNet is the earliest pre-trained model used for recognizing handwritten and machine-printed characters

and has a simple and straightforward architecture.

• AlexNet consists of eight layers, five convolutional layers and three fully connected layers, features ReLU

and overlapping techniques, and allows multiple GPU. The dropout technique is used to prevent overfitting

problems while suffering from longer training time. The dropout technique is that, at every training step,

the number of interconnecting neurons of a neural network is randomly reduced by a percentage. ZFNet is

a classic CNN and was motivated by visualizing intermediate feature layers and the operation of the

[31]

classifier . It has smaller filters and convolution stride than AlexNet.

• Inception network differs from the CNN classifiers in that it has filters with multiple sizes operating on the

same level and concatenated outputs are sent to the next inception module which makes the neural network

wider .

[32]

• GoogLeNet is a 27-layer architecture including nine inception modules that reduce the input images while

retaining important spatial information to achieve efficiency. Users can utilize a GoogLeNet network

trained on Imagenet with transfer learning instead of implementing or training the network from the

scratch.

• ResNet introduces, as shown in Figure 6, an identity shortcut connection that skips one or more layers.

[33]

The identity mapping layers do nothing to avoid producing higher training error . Pre-activation ResNet

makes the optimization easier and reduces the overfitting. RiR (ResNet in ResNet) makes the input with

residual stream and transient stream for better accuracy attempts in order to generalize the ResNet block for

residual network . Residual networks of residual networks (RoR) proposes to have shortcut connections

[34]

[35]

across a group of residual blocks . On top of this, another level of shortcut connection can exist across a

group of “groups of residual blocks”. Wide residual network (WRN) reduces training time but has more

parameters as the network widens and it tests plenty of parameters such as the design of the ResNet block

[36]

including the depth and the widening factor .