Page 4 of 10 Bao et al. Complex Eng Syst 2022;2:16 | http://dx.doi.org/10.20517/ces.2022.30

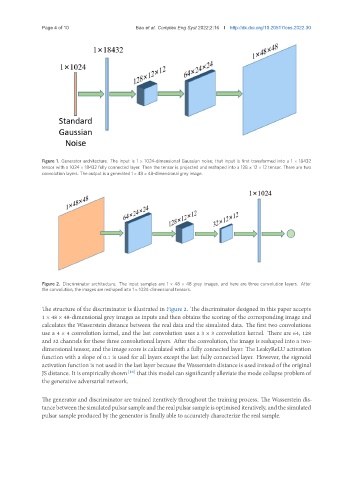

Figure 1. Generator architecture. The input is 1 × 1024-dimensional Gaussian noise, which is first transformed into a 1 × 18432 tensor by a 1024 × 18432 fully connected layer. The tensor is then projected and reshaped into a 128 × 12 × 12 tensor, followed by two convolution layers. The output is a generated 1 × 48 × 48-dimensional grey image.
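
The tensor sizes in the Figure 1 caption can be checked with a few lines of arithmetic. The sketch below assumes that the two convolution layers are transposed convolutions with a 4 × 4 kernel, stride 2 and padding 1. The source does not state these hyperparameters, so they are illustrative only, chosen because they carry a 12 × 12 feature map to 48 × 48 in two steps.

```python
from math import prod

NOISE_DIM = 1024             # length of the Gaussian noise vector (from the caption)
FC_OUT = 18432               # output width of the fully connected layer (from the caption)
FEATURE_MAP = (128, 12, 12)  # shape after reshaping (from the caption)

def transposed_conv_out(size, kernel=4, stride=2, padding=1):
    """Output side length of a transposed convolution.

    kernel, stride and padding are ASSUMED values, not taken from the paper.
    """
    return (size - 1) * stride - 2 * padding + kernel

# The reshape is only valid if the element counts agree.
reshape_is_consistent = prod(FEATURE_MAP) == FC_OUT

# Track the spatial side length through the two assumed upsampling layers.
sizes = [FEATURE_MAP[1]]
for _ in range(2):
    sizes.append(transposed_conv_out(sizes[-1]))
```

Under these assumptions each layer doubles the side length, which matches the 48 × 48 output given in the caption.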

Figure 2. Discriminator architecture. The input samples are 1 × 48 × 48 grey images, and there are three convolution layers. After the convolution, the images are reshaped into 1 × 1024-dimensional tensors.

The structure of the discriminator is illustrated in Figure 2. The discriminator designed in this paper accepts 1 × 48 × 48-dimensional grey images as inputs and obtains a score for each image, from which the Wasserstein distance between the real data and the simulated data is calculated. The first two convolutions use a 4 × 4 kernel, and the last convolution uses a 3 × 3 kernel. The three convolutional layers have 64, 128 and 32 channels, respectively. After the convolutions, the result is reshaped into a two-dimensional tensor, and the image score is calculated with a fully connected layer. The LeakyReLU activation function with a negative slope of 0.1 is used for all layers except the last fully connected layer. No sigmoid activation is applied in the last layer, because the Wasserstein distance is used instead of the original Jensen-Shannon (JS) divergence. It has been shown empirically [16] that this model can significantly alleviate the mode collapse problem of generative adversarial networks.
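
Two points in the paragraph above can be made concrete: the LeakyReLU activation with a slope of 0.1, and the use of raw, unbounded scores in place of sigmoid probabilities. The following is a minimal sketch of the standard Wasserstein critic objective, not the authors' code. The function names and the example scores are hypothetical, and the paper's exact loss (for instance, any Lipschitz regularisation term) may differ.

```python
def leaky_relu(x, negative_slope=0.1):
    """LeakyReLU with the slope of 0.1 quoted in the text."""
    return x if x > 0 else negative_slope * x

def critic_loss(real_scores, fake_scores):
    """Negative Wasserstein estimate (standard WGAN form, assumed here).

    The estimate is mean(score of real) - mean(score of fake); the critic
    minimises its negative, which pushes the two mean scores apart.
    """
    estimate = (sum(real_scores) / len(real_scores)
                - sum(fake_scores) / len(fake_scores))
    return -estimate

# Hypothetical raw scores: no sigmoid, so values are not confined to (0, 1).
real_scores = [2.0, 4.0]
fake_scores = [-1.0, 1.0]
loss = critic_loss(real_scores, fake_scores)
```

Because the scores are compared only through their means, squashing them with a sigmoid would distort the distance estimate, which is consistent with the omission described in the text.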

The generator and discriminator are trained iteratively throughout the training process. The Wasserstein distance between the simulated pulsar sample and the real pulsar sample is optimised iteratively, and the simulated pulsar sample produced by the generator is finally able to accurately characterize the real sample.
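
The alternating scheme described above can be illustrated on a one-dimensional toy problem. This sketch is an assumption-laden stand-in, not the paper's training code. It uses scalar samples instead of pulsar images, a linear critic f(x) = w·x, a shift-only generator g(z) = z + b, and weight clipping (as in the original WGAN) to keep the critic bounded. All hyperparameter values are hypothetical.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

# Toy stand-ins (hypothetical): scalar "real" samples and generator noise.
real = [random.gauss(3.0, 1.0) for _ in range(256)]
noise = [random.gauss(0.0, 1.0) for _ in range(256)]

def mean(values):
    return sum(values) / len(values)

w = 0.0  # critic parameter: f(x) = w * x
b = 0.0  # generator parameter: g(z) = z + b
CLIP, LR_CRITIC, LR_GEN, N_CRITIC = 1.0, 0.1, 0.01, 5  # assumed values

def mean_gap(shift):
    """mean(real) - mean(fake); w * gap is the critic's distance estimate."""
    fake = [z + shift for z in noise]
    return mean(real) - mean(fake)

initial_gap = mean_gap(b)
for _ in range(1000):
    # Critic phase: gradient ascent on w * gap, then clip w to [-CLIP, CLIP].
    for _ in range(N_CRITIC):
        w += LR_CRITIC * mean_gap(b)
        w = max(-CLIP, min(CLIP, w))
    # Generator phase: gradient descent on -mean(f(fake)), whose d/db is -w.
    b += LR_GEN * w
final_gap = mean_gap(b)
```

In this toy setting the generator shift moves towards the mean of the real samples, and the gap ends far smaller than its starting value. With such a simple linear critic the gap tends to hover near zero rather than settle exactly, which is one reason practical implementations use richer critics and tuned update ratios.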