Page 31 - Read Online

P. 31

Qi et al. Intell Robot 2021;1(1):18-57 I http://dx.doi.org/10.20517/ir.2021.02 Page 26

Features

Training Data

Labels Labels

Federated Transfer Learning

New Features

Labels

Features

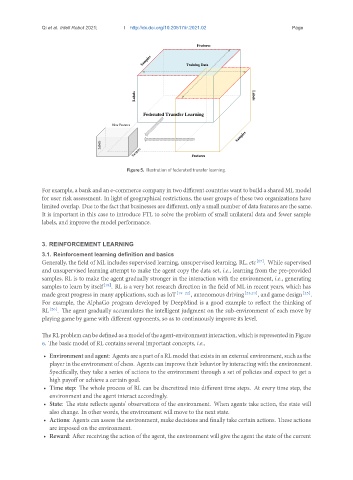

Figure 5. Illustration of federated transfer learning.

For example, a bank and an e-commerce company in two different countries want to build a shared ML model

for user risk assessment. In light of geographical restrictions, the user groups of these two organizations have

limited overlap. Due to the fact that businesses are different, only a small number of data features are the same.

It is important in this case to introduce FTL to solve the problem of small unilateral data and fewer sample

labels, and improve the model performance.

3. REINFORCEMENT LEARNING

3.1. Reinforcement learning definition and basics

Generally, the field of ML includes supervised learning, unsupervised learning, RL, etc [17] . While supervised

and unsupervised learning attempt to make the agent copy the data set, i.e., learning from the pre-provided

samples, RL is to make the agent gradually stronger in the interaction with the environment, i.e., generating

samples to learn by itself [18] . RL is a very hot research direction in the field of ML in recent years, which has

made great progress in many applications, such as IoT [19–22] , autonomous driving [23,24] , and game design [25] .

For example, the AlphaGo program developed by DeepMind is a good example to reflect the thinking of

RL [26] . The agent gradually accumulates the intelligent judgment on the sub-environment of each move by

playing game by game with different opponents, so as to continuously improve its level.

TheRLproblemcanbedefinedasamodeloftheagent-environmentinteraction,whichisrepresentedinFigure

6. The basic model of RL contains several important concepts, i.e.,

• Environment and agent: Agents are a part of a RL model that exists in an external environment, such as the

player in the environment of chess. Agents can improve their behavior by interacting with the environment.

Specifically, they take a series of actions to the environment through a set of policies and expect to get a

high payoff or achieve a certain goal.

• Time step: The whole process of RL can be discretized into different time steps. At every time step, the

environment and the agent interact accordingly.

• State: The state reflects agents’ observations of the environment. When agents take action, the state will

also change. In other words, the environment will move to the next state.

• Actions: Agents can assess the environment, make decisions and finally take certain actions. These actions

are imposed on the environment.

• Reward: After receiving the action of the agent, the environment will give the agent the state of the current