Page 23 - Read Online

P. 23

Page 4 of 22 Ernest et al. Complex Eng Syst 2023;3:4 I http://dx.doi.org/10.20517/ces.2022.54

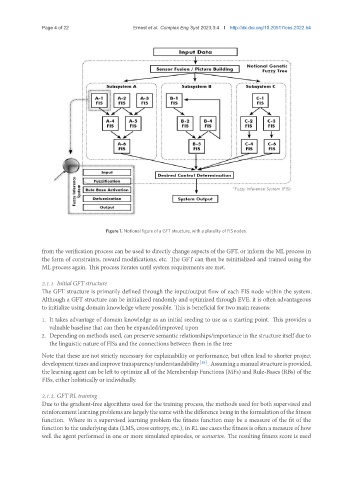

Figure 1. Notional figure of a GFT structure, with a plurality of FIS nodes.

from the verification process can be used to directly change aspects of the GFT, or inform the ML process in

the form of constraints, reward modifications, etc. The GFT can then be reinitialized and trained using the

ML process again. This process iterates until system requirements are met.

2.1.1. Initial GFT structure

The GFT structure is primarily defined through the input/output flow of each FIS node within the system.

Although a GFT structure can be initialized randomly and optimized through EVE, it is often advantageous

to initialize using domain knowledge where possible. This is beneficial for two main reasons:

1. It takes advantage of domain knowledge as an initial seeding to use as a starting point. This provides a

valuable baseline that can then be expanded/improved upon

2. Depending on methods used, can preserve semantic relationships/importance in the structure itself due to

the linguistic nature of FISs and the connections between them in the tree

Note that these are not strictly necessary for explainability or performance, but often lead to shorter project

developmenttimesandimprovetransparency/understandability [18] . Assumingamanualstructureisprovided,

the learning agent can be left to optimize all of the Membership Functions (MFs) and Rule-Bases (RBs) of the

FISs, either holistically or individually.

2.1.2. GFT RL training

Due to the gradient-free algorithms used for the training process, the methods used for both supervised and

reinforcement learning problems are largely the same with the difference being in the formulation of the fitness

function. Where in a supervised learning problem the fitness function may be a measure of the fit of the

function to the underlying data (LMS, cross entropy, etc.), in RL use cases the fitness is often a measure of how

well the agent performed in one or more simulated episodes, or scenarios. The resulting fitness score is used